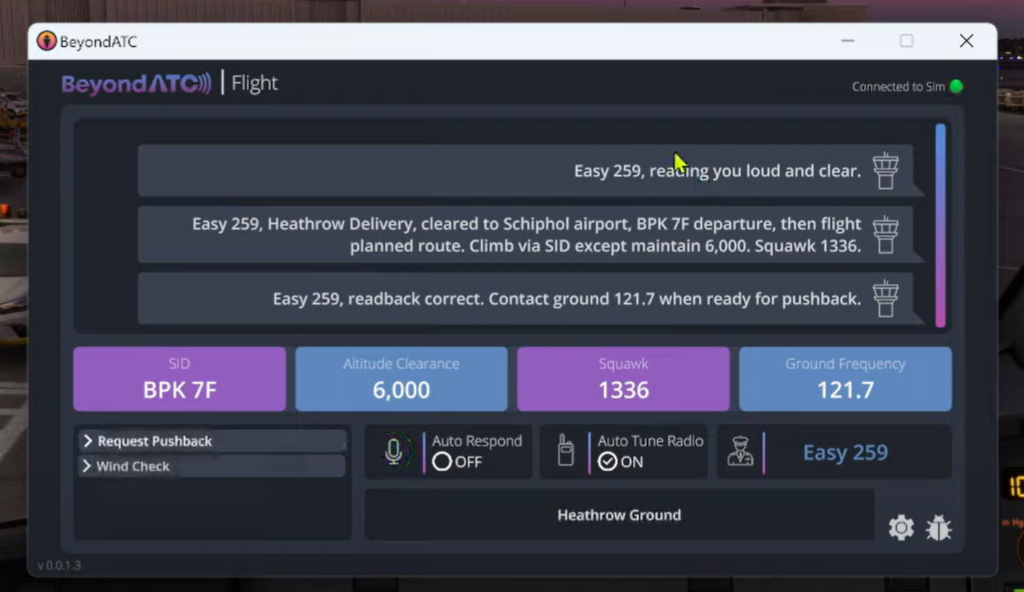

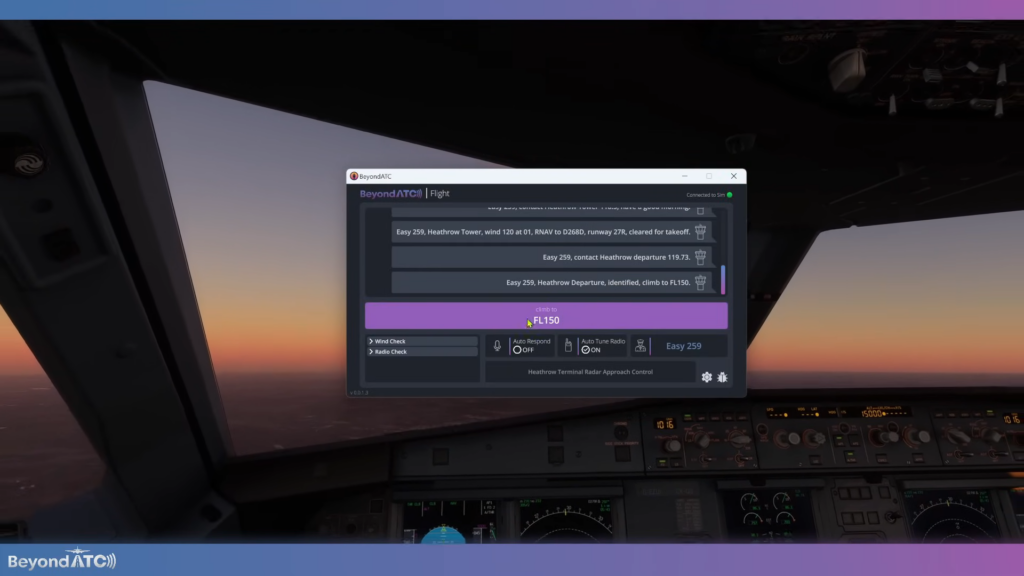

BeyondATC have shared more information on Discord about their upcoming LLM (Large Language Model) update. The update is coming soon to supporters and will eventually reach the public release. This short update clarifies some points and gives users more context on how the LLM will work with BeyondATC, an AI-powered ATC program for MSFS 2020 and 2024.

What Will the LLM Do?

The LLM will act as an additional logic layer on top of BeyondATC’s core engine, changing how the program interprets what you say. However, the LLM cannot perform tasks that the core program does not support. For example, it cannot respond to a request for different vectors, offer an alternative to an instruction it has given, or understand an “unable” response.

This limitation comes down to the core functionality of BeyondATC. As these features have not been implemented in the core program, the LLM cannot add them. It does not increase the number of actions available to you, but instead expands the language you can use with the program. These limitations also help to prevent the LLM from giving nonsensical instructions to pilots.
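The relationship described above, where the LLM may only propose actions that the core engine already supports, can be pictured as a simple allow-list check. The following is a minimal sketch and not BeyondATC's actual implementation: the action names, the `Result` type and the `dispatch` function are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical actions supported by the core engine (names invented for this sketch).
SUPPORTED_ACTIONS = {"radio_check", "repeat_squawk", "report_wind", "report_delays"}


@dataclass
class Result:
    accepted: bool
    action: Optional[str]
    message: str


def dispatch(proposed_action: str) -> Result:
    """Accept an action proposed by the language layer only if the core supports it."""
    if proposed_action in SUPPORTED_ACTIONS:
        return Result(True, proposed_action, f"Executing {proposed_action}")
    # Unsupported requests are rejected rather than improvised.
    return Result(False, None, "Request not supported by the core engine")
```

In this sketch, `dispatch("radio_check")` is accepted, while a proposal such as `dispatch("alternative_vectors")` is rejected because no such action exists in the allow-list.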

BeyondATC’s LLM Functionality

The LLM brings a major improvement to how BeyondATC understands a pilot’s request. It gives the program a better understanding of your situation and its context. This means BeyondATC can interpret your requests more reliably, even if you phrase them differently. Instead of getting a “request not understood”, you will now always receive a response.

Building on the core engine, the LLM can contextualise your input and translate it into actions that the core program supports. For example, you could say “Can you hear me?” and this would trigger a radio check action within the program, without you having to request a radio check using more standard phraseology.

Even if your microphone misinterprets some of your words, the LLM can still infer your intent. It also allows for more informational inquiries, such as asking for your squawk code to be repeated, or asking about possible delays or the wind direction at an airport.
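To make the idea of mapping loosely worded or slightly misheard input onto a fixed set of actions more concrete, here is a toy sketch. It uses fuzzy string matching from Python's standard `difflib` module as a stand-in for the LLM, so it is far cruder than what BeyondATC describes. The phrases, the action names and the `resolve_intent` function are assumptions made purely for illustration.

```python
import difflib
from typing import Optional

# Hypothetical example phrases mapped to hypothetical action names.
PHRASES = {
    "radio check": "radio_check",
    "can you hear me": "radio_check",
    "say again my squawk code": "repeat_squawk",
    "what is the wind": "report_wind",
}


def resolve_intent(utterance: str, cutoff: float = 0.6) -> Optional[str]:
    """Map an utterance to the closest known action, or None if nothing is close."""
    # Normalise case and strip surrounding punctuation before comparing.
    text = utterance.lower().strip(" ?!.")
    matches = difflib.get_close_matches(text, list(PHRASES), n=1, cutoff=cutoff)
    return PHRASES[matches[0]] if matches else None
```

Here both `resolve_intent("Can you hear me?")` and the misheard `resolve_intent("can you here me")` resolve to the same `radio_check` action, while input that resembles none of the known phrases returns `None`.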

Final Notes

BeyondATC have designed the LLM to have a minimal impact on your system. This will help to maintain optimal performance for your simulator, which is their priority. The LLM offloads the heavy lifting to their servers instead of doing it all locally. As a result, users are unlikely to notice much of a difference in performance.

Similar to their other feature releases, the developers will release the LLM feature into the experimental branch for supporters. This allows them to monitor progress and feedback, making improvements over time. Once the issues have been ironed out and the feature is stable, they will release it into the public branch for free.

Feel free to join our Discord server to share your feedback on the article, screenshots from your flights or just chat with the rest of the team and the community.